- This event has passed.

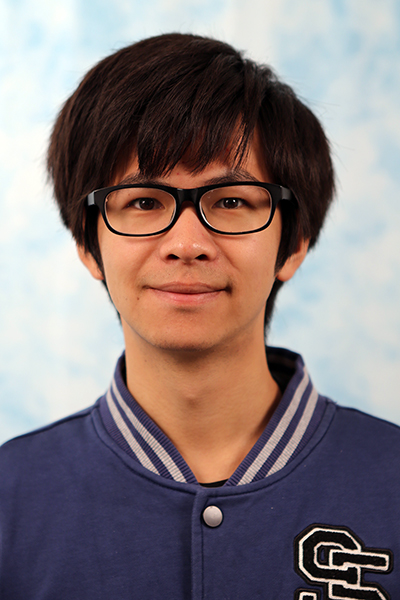

Fall 2023 GRASP Seminar: Donglai Xiang, Carnegie Mellon University, “Modeling Dynamic Clothing for Data-Driven Photorealistic Avatars”

October 17, 2023 @ 1:30 pm - 2:30 pm

This is a hybrid event with in-person attendance in Levine 512 and virtual attendance on Zoom.

ABSTRACT

In this talk, I will present research on building photorealistic avatars of humans wearing complex clothing in a data-driven manner. Such avatars will be a critical technology for enabling future applications such as VR/AR and virtual content creation. Loose-fitting clothing poses a significant challenge for avatar modeling because of its large deformation space. We address this challenge by unifying three components of avatar modeling: a model-based statistical prior learned from pre-captured data, a physics-based prior from simulation, and real-time measurements from sparse sensor input. First, we introduce a two-layer representation that separates the body from the clothing, allowing us to disentangle the dynamics of the pose-driven body from those of the temporally dependent clothing. Second, we combine physics-based cloth simulation with a physics-inspired neural rendering model to generate rich, natural dynamics and appearance, even for challenging garments such as skirts and dresses. Finally, we go beyond pose-driven animation and incorporate online sensor input into the avatars to achieve more faithful telepresence of clothing.