
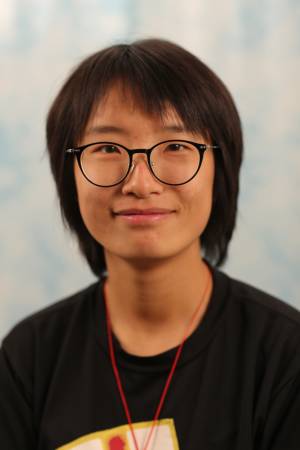

Fall 2023 GRASP Seminar: Yufei Ye, Carnegie Mellon University, “Predicting and Reconstructing Everyday Human Interactions”

December 12, 2023 @ 1:15 pm - 2:15 pm

*This seminar will be held in-person in Levine 512 with virtual attendance via Zoom. The seminar will NOT be recorded.

ABSTRACT

In this talk, I will discuss building computer vision systems that understand everyday human interactions with rich spatial information, in particular hand-object interactions (HOI). Such systems can help VR/AR perceive reality and modify its virtual twin, and help robots learn manipulation by watching humans. Previous methods are limited to constrained lab environments or pre-selected objects with known 3D shapes. My work explores learning general interaction priors from large-scale data that generalize to novel everyday scenes for both perception and prediction.

The talk consists of two parts. The first part focuses on HOI prediction: predicting plausible human grasps for any object. We found that image synthesis serves as a shortcut to 3D prediction and improves generalization. The second part focuses on reconstructing interactions in 3D space for generic objects by leveraging data-driven priors, including from single images and everyday video clips.